Policy Stitching: Learning Transferable Robot Policies

Conference on Robot Learning (CoRL 2023)

Overview

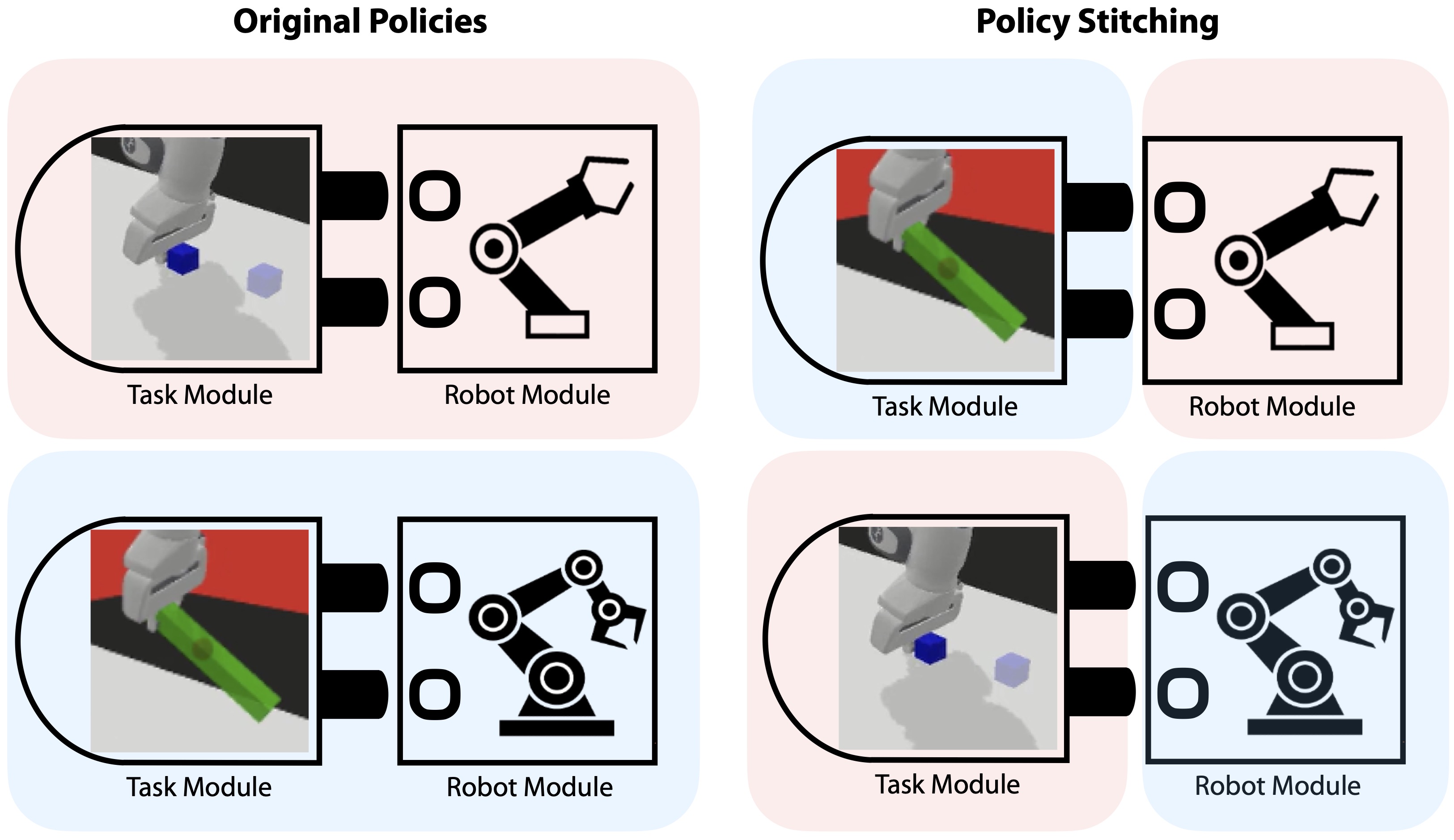

Training robots with reinforcement learning (RL) typically requires extensive interaction with the environment, and the acquired skills are often sensitive to changes in the task environment and in robot kinematics. Transfer RL aims to leverage previous knowledge to accelerate learning of new tasks or new body configurations. However, existing methods struggle to generalize to novel robot-task combinations and to scale to realistic tasks, because of complex architecture design or strong regularization that limits the capacity of the learned policy. We propose Policy Stitching, a novel framework that facilitates robot transfer learning for novel combinations of robots and tasks. Our key idea is to apply modular policy design and to align the latent representations at the interface between modules. Our method allows robot and task modules that were trained separately to be stitched together directly, forming a new policy for fast adaptation. Our simulated and real-world experiments on various 3D manipulation tasks demonstrate the superior zero-shot and few-shot transfer learning performance of our method.
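To make the stitching idea concrete, the following is a minimal sketch and not the released implementation. The overview does not say how the latent interfaces are aligned, so the sketch assumes one possible scheme: each task latent is re-expressed as its cosine similarity to the latents of a fixed set of anchor states, which makes the interface unchanged by any rotation of the underlying latent space. All names (`TaskModule`, `RobotModule`, `stitch`, the weights and anchor states) are hypothetical and chosen for illustration only.

```python
import math


def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


class TaskModule:
    """Hypothetical task module: task state -> aligned interface vector."""

    def __init__(self, weights, anchor_states):
        self.weights = weights
        # Assumption: anchors are embedded once with this module's own encoder.
        self.anchor_latents = [matvec(weights, s) for s in anchor_states]

    def raw_latent(self, task_state):
        return matvec(self.weights, task_state)

    def __call__(self, task_state):
        latent = self.raw_latent(task_state)
        # Assumed alignment step: describe the latent relative to the anchors.
        return [cosine(latent, a) for a in self.anchor_latents]


class RobotModule:
    """Hypothetical robot module: (interface vector, robot state) -> action."""

    def __init__(self, weights):
        self.weights = weights

    def __call__(self, interface, robot_state):
        return matvec(self.weights, interface + robot_state)


def stitch(task_module, robot_module):
    """Compose separately built modules into a single policy function."""
    def policy(task_state, robot_state):
        return robot_module(task_module(task_state), robot_state)
    return policy


anchors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
base = [[1.0, 0.5], [-0.3, 2.0]]
theta = 1.1  # the second encoder's latent space is a rotated copy of the first
rot = [[math.cos(theta), -math.sin(theta)], [math.sin(theta), math.cos(theta)]]

task_a = TaskModule(base, anchors)
task_b = TaskModule(matmul(rot, base), anchors)
robot = RobotModule([[0.2, -0.1, 0.4, 1.0, 0.0], [0.3, 0.5, -0.2, 0.0, 1.0]])

task_state, robot_state = [0.4, -0.7], [0.1, 0.2]
raw_a = task_a.raw_latent(task_state)
raw_b = task_b.raw_latent(task_state)
action_a = stitch(task_a, robot)(task_state, robot_state)
action_b = stitch(task_b, robot)(task_state, robot_state)
print(action_a, action_b)
```

In this toy setup the two task modules produce different raw latents, yet the robot module returns the same action with either one, because the anchor-relative interface is identical. Real policies would use trained neural networks, and their latent spaces would generally differ by more than a rotation, so this only illustrates why an aligned interface makes direct module swapping plausible.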

Paper

Check out our paper linked here.

Codebase

Check out our codebase at https://github.com/general-robotics-duke/Policy-Stitching

Citation

@inproceedings{jian2023policy,
  title={Policy Stitching: Learning Transferable Robot Policies},
  author={Jian, Pingcheng and Lee, Easop and Bell, Zachary and Zavlanos, Michael M and Chen, Boyuan},
  booktitle={7th Annual Conference on Robot Learning},
  year={2023}
}

Acknowledgment

This work is supported in part by ARL under awards W911NF2320182 and W911NF2220113, by AFOSR under award FA9550-19-1-0169, and by NSF under award CNS-1932011.

Contact

If you have any questions, please feel free to contact Pingcheng Jian.