CREW-Wildfire: Benchmarking Agentic Multi-Agent Collaborations at Scale

Preprint 2025

Overview

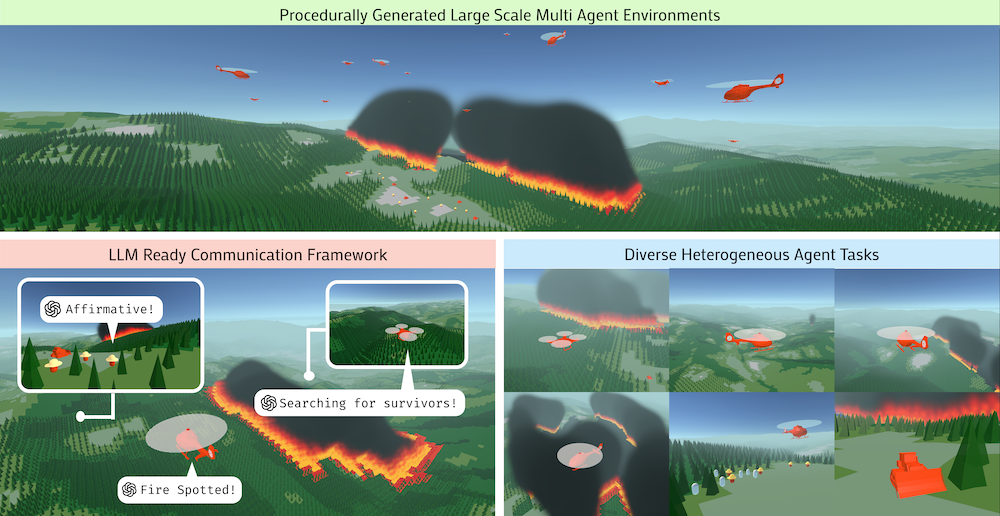

Despite rapid progress in large language model (LLM)-based multi-agent systems, current benchmarks fall short in evaluating their scalability, robustness, and coordination capabilities in complex, dynamic, real-world tasks. Existing environments typically focus on small-scale, fully observable, or low-complexity domains, limiting their utility for developing and assessing next-generation multi-agent agentic AI frameworks. We introduce CREW-Wildfire, an open-source benchmark designed to close this gap. Built atop the human-AI teaming CREW simulation platform, CREW-Wildfire offers procedurally generated wildfire response scenarios featuring large maps, heterogeneous agents, partial observability, stochastic dynamics, and long-horizon planning objectives. The environment supports both low-level control and high-level natural language interactions through modular perception and execution modules. We implement and evaluate several state-of-the-art LLM-based multi-agent agentic AI frameworks, uncovering significant performance gaps that highlight the unsolved challenges in large-scale coordination, communication, spatial reasoning, and long-horizon planning under uncertainty. By providing more realistic complexity, a scalable architecture, and behavioral evaluation metrics, CREW-Wildfire establishes a critical foundation for advancing research in scalable multi-agent agentic intelligence. All code, environments, data, and baselines will be released to support future research in this emerging domain.

Video

Paper

Check out our paper at https://arxiv.org/abs/2507.05178.

Codebase

Check out our codebase at https://github.com/generalroboticslab/CREW/tree/main/crew-algorithms/crew_algorithms/wildfire_alg. Full documentation for CREW-Wildfire is available at https://generalroboticslab.github.io/wildfire-docs/.

Citation

@misc{hyun2025crewwildfirebenchmarkingagenticmultiagent,
  title={CREW-WILDFIRE: Benchmarking Agentic Multi-Agent Collaborations at Scale},
  author={Jonathan Hyun and Nicholas R Waytowich and Boyuan Chen},
  year={2025},
  eprint={2507.05178},
  archivePrefix={arXiv},
  primaryClass={cs.MA},
  url={https://arxiv.org/abs/2507.05178},
}

Acknowledgment

This work is supported by the ARL STRONG program under awards W911NF2320182, W911NF2220113, and W911NF2420215, and by gifts from BMW and OpenAI.